
Over the last several years, a man named Darren Beattie has been busy on Xitter promoting a crude, ignorant form of eugenics (even “sophisticated” versions of eugenics are deplorable and wrong, but Darren favors the ugliest kind.)

Population control? If only! Higher quality humans are subsidizing the fertility of lower quality humans.

When a population gets feral, a little snip snip keeps things in control. Could offer incentives (Air Jordans, etc.).

Pay smart people to have more kids, disincentivize stupid people from having kids. So simple but molds destiny on deep intergenerational level.

In September 2023, he responded to news about migrants from Africa who rioted in Israel by suggesting that the Israeli government “literally just round them up and drop them in the ocean.”

Let the ‘human rights groups’ whine… drop them in the ocean too!

“It isn’t politically correct to say, but low-iq, low-impulse control populations lack higher reasoning and moral faculties. They require strict corporal punishment and threat of violence to function properly within a society. Instead of anarcho-tyranny, we need Singapore for the dumb and violent, and Sweden for the more elevated.”

The same low-IQ trash who watch the fast and furious franchise. Beginning to wish the whole population reduction conspiracy were true.

Competent white men must be in charge if you want things to work. Unfortunately, our entire national ideology is predicated on coddling the feelings of women and minorities, and demoralizing competent white men.

You may say “So what? Trolls like that are a dime-a-dozen on Xitter” or maybe “Typical mindless bot.” Except…he actually has an official position in the Trump administration.

He was a speechwriter (!!!) for Trump in his first administration, but got fired when people noticed that he was attending white nationalist conferences. It was a simpler time, when a few people in the White House could be slightly embarrassed by overt racists.

Don’t worry that Darren might have gone hungry — he was quickly hired by Matt Gaetz as a speechwriter.

And then Trump won his second presidential race, all concern for propriety vanished, and Marco Rubio snatched up this eloquent writer and promoted him to Under Secretary of State for Public Diplomacy and Public Affairs, and Trump made him acting president of the United States Institute of Peace. “Diplomacy” and “Peace” are not words I would apply to him, but Trump and Rubio think different.

Beattie has since been replaced as Under Secretary of State by Sarah Rogers, a former lawyer who worked for Philip Morris to protect them from litigation, and then worked for a law firm specializing in clients like the National Rifle Association, tobacco companies, and venture capitalists in AI. She seems to be much more muted and diplomatic than her predecessor, but I still wouldn’t trust her.

He’s still president of the Donald J. Trump Institute of Peace. I don’t know what he does there. Go out for lunch with Stephen Miller?

If you want to know more about the Republican agenda, talk to Darren Beattie.

Hell no, I said. He also recommended a physical therapist, which I think is a good idea. I was just so annoyed that I had to rant for a little bit.

This government is clearly planning to put boots on the ground in Iran. They’re declaring that it is Jesus/Trump’s will to launch Armageddon now.

A combat-unit commander told non-commissioned officers at a briefing Monday that the Iran war is part of God’s plan and that Pres. Donald Trump was “anointed by Jesus to light the signal fire in Iran to cause Armageddon and mark his return to Earth,” according to a complaint by a non-commissioned officer.

From Saturday morning through Monday night, more than 110 similar complaints about commanders in every branch of the military had been logged by the Military Religious Freedom Foundation (MRFF).

The complaints came from more than 40 different units spread across at least 30 military installations, the MRFF told me Monday night.

When our military leaders start using apocalyptic language at the start of a war, worry.

“This morning our commander opened up the combat readiness status briefing by urging us to not be ‘afraid’ as to what is happening with our combat operations in Iran right now. He urged us to tell our troops that this was ‘all part of God’s divine plan’ and he specifically referenced numerous citations out of the Book of Revelation referring to Armageddon and the imminent return of Jesus Christ. He said that ‘President Trump has been anointed by Jesus to light the signal fire in Iran to cause Armageddon and mark his return to Earth.’”

— MRFF active duty NCO client, writing on behalf of themself and 15 other unit members

Let’s hope that this doesn’t happen and that we aren’t sifting through the ashes looking for something to eat next week. And if it doesn’t happen, every single one of these goddamn bible-thumping murderous commanders must be dismissed and drummed out of the military in disgrace.

While we’re at it, can we also get the names of all the White House personnel who are currently lining up to put inside bets on the date of the invasion on Polymarket? They need to be jailed or executed, too.

Mano Singham considers an essay from one of those people who say they were an atheist, but have now returned to their faith. Mano treats it thoughtfully and respectfully, and I can appreciate that, but nowadays my response to such a claim is “You’re full of crap, bye.”

I know, I’m a bad, rude person.

Unfortunately, it seems like even the most fervent, fanatical televangelist has a similar story about having been a heretical wastrel in their youth, but then they found Jesus and are now saved. It’s part of a redemption arc, and also part of a slur against atheists, that they only deny God because they are immature and hedonistic and haven’t thought seriously about faith.

I think Mano has it exactly right.

I left religion for purely logical reasons, not emotional ones. I found that however hard I tried, I just could not reconcile the scientific view that everything occurs according to natural laws with the traditional religious view that seemed to require an entity that could bypass those laws to act in the world to change the course of events. It took me a long time to overcome the emotional attachment to the religious beliefs that I had. So while I can understand how logical reasoning can make one leave religion, I cannot see how it can drive the reverse process, as Beha seems to desire.

Same here, except that my family faith tradition didn’t have much of an emotional attachment to Christianity, so shedding it was relatively trivial. I agree, though, that there are no good rational reasons to compel return to a faith, which is why I reject any attempts to rationalize it. It feels good to you, it connects you to friends and family, you have fond memories of your time in church…that’s fine. I believe you. Go ahead, I’m not going to deny your feelings. But if you try to tell me you have compelling, logical, scientific reasons to believe in a god, I’m going to tell you you’re full of shit.

This guy, Christopher Beha, has his own simple excuse.

To ask “How am I to live?” is to inquire as to not just what is right but what is good. It is to ask not just “What should I do?” but “How should I be?” The most generous interpretation of the New Atheist view on this question is that people ought to have the freedom to decide for themselves. On that, I agreed completely, but that left me right where I’d started, still in need of an answer.

That’s about as superficial a rationalization for becoming a Catholic as I can imagine. Why become a Catholic? Because you need someone to tell you what to do. Maybe Mr Beha should then ask, “Why should I trust this guy in a clerical collar or this holy book to know what I should do?” He’s not looking for an answer, he’s looking for an authority.

The more complete interpretation of the atheist view is that there is no one to tell you what to do with your life. And anyone who is telling you otherwise is lying to you.

The Lancet recently published an editorial condemning RFK Jr, titled Robert F Kennedy Jr: 1 year of failure. That link will give you the entire thing — I might as well, since quoting the parts that tear into his record and his policies gives you about half of the whole article anyway. He has been a disaster.

10 days after his speech about trust and openness, HHS rescinded a 54-year-old policy of soliciting public comments for new rules and regulations, silencing the voices of many of the stakeholders he pledged to serve. Kennedy has summarily dismissed advisers and experts, communicated policy changes on paywalled media, fired a whistleblower, and overseen the revisions of guidelines and recommendations, contradicting decades of established science, often to the benefit of industries he formerly condemned. Under Kennedy’s leadership, the National Institutes of Health (NIH) shuttered programmes studying the health effects of air pollution, HHS withheld a report linking alcohol consumption to cancer, and the Food and Drug Administration (FDA) withdrew warnings of potential harm from consuming products (such as raw milk and chlorine dioxide) falsely marketed as treatments for autism. His changes at CDC have driven 26 states to reject official guidance on vaccine policy, and in December the CDC awarded an unsolicited $1·6 million grant to conduct a vaccine study in Guinea-Bissau that raised so many ethical concerns—the design would have risked exposing thousands of unvaccinated children to hepatitis B—that it has been compared to the infamous Untreated Syphilis Study at Tuskegee.

HHS under Kennedy has made a habit of throwing good money after bad science. Amid the Trump administration’s cuts to research funding and personnel there has been a harmful shift in priorities. Cutting-edge discoveries and clinical investigations—on subjects ranging from mRNA vaccines to diabetes and dementia—are denied crucial resources while junk science and fringe beliefs are elevated without justifiable explanation. Under Kennedy’s leadership, politicisation at the NIH, FDA, and CDC is imperilling the future of US science and innovation and throttling the public health enterprise that keeps the country safe today.

And then, the consequences! Growing measles and pertussis epidemics, worsening maternal mortality rates, ridiculous campaigns against food dyes while ignoring capitalism’s malignant promotion of processed food. He’s a train wreck that leaps from track to track smashing every benefit of the scientific and educational infrastructure of the country. We’ve replaced NIH and NSF with TikTok.

We have to get rid of this guy.

Despite these developments, Kennedy has continued to spread misinformation and push politicised agendas at the expense of the country’s most vulnerable. When called to account for his decisions by Congress, he has been evasive and combative. The destruction that Kennedy has wrought in 1 year might take generations to repair, and there is little hope for US health and science while he remains at the helm. Calls for his resignation now number in the thousands. Congress must exercise its duty of oversight and hold Kennedy accountable for his record, or else accept responsibility for endorsing President Trump’s decision to let him “run wild on health”.

Unfortunately, even if we drop-kicked his leathery ass into a sewage pool today, Trump has another fraud queued up for the position of surgeon general, Casey Means (she is not a doctor, despite the media habit of gluing that prefix on her name). Don’t let her get any power, or we’ll have to go to all the trouble of excising her like an infected cyst.

Back in the day, when online dating profiles contained more than a few sentences, I once put the phrase “enjoys the films of Wes Anderson” in my bio. Alongside the tattoos and my coffee addiction, it felt like a solid signifier of what kind of girl I was. Wes Anderson fans could be counted on to enjoy the unexpected and the twee, taking pleasure in the details and a well-chosen colour scheme.

These days, this same fact would reveal me as an ageing hipster, but judging by the turnout at Wes Anderson: The Archives – a retrospective currently showing at the Design Museum in London – there’s a lot of us. I visited a month after it opened, and it was still sold out for the day. But there’s plenty of time to attend – it’s in London until 26 July, before moving on to other cities worldwide.

This is a show for film geeks, for sure, but one that should also appeal to connoisseurs of design, and anyone with a love of craft and a curated aesthetic. On the surface, it’s a retrospective that allows us to study the archives from the director’s 30-year career, starting with the mid-90s Bottle Rocket before concluding with last year’s The Phoenician Scheme. We’re treated to more than 700 objects, from the icing-pink model of The Grand Budapest Hotel to the lefty scissors “used in self-defence” in Moonrise Kingdom. Anderson’s overarching topics are dysfunctional families, innocence lost, grief, and obsessive characters on a mission, all styled in an exacting, deadpan manner that’s somehow also very funny. Alongside the objects, screens play clips and music, presenting little set pieces from each film.

Some items feel like a celebrity sighting – I experienced a genuine thrill on encountering the Fendi fur coat that Gwyneth Paltrow wore in The Royal Tenenbaums. Ditto the incredible animal-painted suitcases that feature prominently in The Darjeeling Limited, made by the fictional brand François Voltaire (but actually made by Marc Jacobs, in his Louis Vuitton days). The extreme attention to detail is simply stunning – like how Anderson made sure the ink used to tattoo Mayor Kobayashi in Isle of Dogs is the exact type favoured by the Japanese yakuza, even though hardly anyone would be able to tell.

We’re taken deep into Anderson’s world and we’re learning a lot, but rarely does the director reveal himself – we’re left to interpret his intentions through the objects on display. I gather he’s a man who’s exact (his notebooks, which are small, cheap and spiral-bound, are filled with neat block handwriting). He’s uncompromising about detail (he once held an audition for left-handed children just to write notes for Suzy, she of the lefty scissors). He knows what he likes (while refined over time, his aesthetic was there from the start; Bottle Rocket, a heist film that’s really about friends growing apart, is visually framed in the same exacting way as all the rest). He’s not really widened his scope over time, but maybe that was never the point: this is about honing the form, slowly and meticulously, over a lifetime.

Born and raised in Texas, Anderson first met actor Owen Wilson at the University of Texas at Austin. Wilson appears in many of Anderson’s films, one of a list of regular actors he keeps returning to. “I don’t know who gravitated toward whom,” Anderson told the New York Times in 2021. “But as soon as Owen Wilson and I started making a movie, well, I wanted Owen to be involved with the other movies I would do. As soon as I had Bill Murray, I wanted him on the next one. I wanted Jason Schwartzman. It was natural to me.” This preference for familiarity extends beyond just actors – Anderson’s younger brother, Eric, is a painter whose works often appear in his films, while filmmaker Roman Coppola has worked with the director on several recent scripts.

Now 56, Anderson’s been doing his thing for 30 years, and keeping it the same has certainly aided his journey as an auteur, although he has on occasion crossed the line into self-parody – in Asteroid City, twee charm is not quite enough to suspend disbelief about a vending machine selling real estate. But I suspect Anderson would just say he’s doing what he likes. “I’ve done a bunch of movies. And it’s a luxury to me that they’re all whatever I’ve wanted them to be,” he once told the Observer. To this day, he puts out films that walk the line between the ridiculous and profound, in a way that somehow slips past even the most determined cynicism – it simply feels delightful. (And if you disagree, let me quote my step-daughter: “Is it because you hate fun?”)

The criticism for centering white, well-off characters is harder to brush off. But he’s rarely too sympathetic to his characters, often anti-heroes who are lost, stuck or reeling from recent bereavement. This criticism is most often directed at The Darjeeling Limited, where three rich American brothers blunder their way through India. But their cluelessness is a key point of the film – the trope of the white person going to India, where they try and fail to reach some sort of enlightenment. Anderson’s characters often demonstrate naive motivations and an obsession with what they desire, while struggling to fully grasp what’s going on around them – like a lot of us, one might argue.

Of course, most of us have to work out our issues without exploring quite as many exciting foreign locales, and certainly with far fewer decadent objects and stuffed exotic animals around. Leaning hard into the quirky details is part of Anderson’s process – it’s not a shark but a jaguar shark in The Life Aquatic, faithfully represented in miniature papier-mâché. And nothing speaks to his love of detail more than stop-motion – he created not just one full-length feature using this Sisyphean method, but two.

I think this attention to detail and craft is a big part of what audiences love about Anderson’s work. We live in the era of “enshittification” – a term coined by the author and technology activist Cory Doctorow to describe how our online experience is steadily getting worse, as platforms turn their focus to profits. When everyone seems to be cutting corners, it feels good to know that some processes are uncompromising. In the age of speedy video clips, where a minute is considered long, it’s refreshing that films are still being made that are going to take as long as they take. Fantastic Mr Fox took a gruelling two years, as the stop-motion figures were moved by hand, up to 24 times for every second of footage. The worlds that Anderson creates are an invitation into a more embodied time, where all phones are wired into the wall and the idea of asking a computer for its opinion would be absurd. At the core of his obsession lies a simple beauty, and when we stand in front of it we recognise something that is human and real.

The exhibition doesn’t dwell too much on the how and why of it all. Instead, we are invited to simply admire, for example, the mismatched red hats from The Life Aquatic. But maybe the withholding of context, and biographical information, is part of the point. (“The film is the thing,” director David Lynch once said when pressed to explain himself; everything he wanted to say was already on the screen.) In interviews, Anderson is described as reserved and polite, reluctant to talk much about himself but very eager to get into the nuts and bolts of his projects, so it’s fitting that the show is no different.

There are still some hints of the man behind the screen. There’s a miniature of the book Fantastic Mr Fox on display as part of the props from the film – we’re told it’s an exact replica of the edition the director had as a child. Anderson has commissioned lots of book covers to be used in his films, but this time he chose something from his own history. We’re not told why, but we can imagine.

I’m left enthralled by the exactness of it all, imagining the director in deep concentration as he chases down some rabbit hole, riding the flow of focus, seeking discovery and his own idea of perfection. Anderson is always going to be the one who cares the most. He’s doing it for the love of the craft – and thankfully, we get to watch.

This article is from New Humanist's Spring 2026 edition.

Here is a quick and easy way of boosting your motivation….

There was a long-running rivalry between tennis stars Andy Murray and Novak Djokovic. In 2012, they faced one another in the final of the US Open. Murray took the first two sets but Djokovic battled back to win the next two. Murray took a toilet break and, according to several reports, looked in the bathroom mirror and shouted: “You are not losing this match… you are not going to let this one slip.” Murray then walked back out and faced his opponent for the deciding set.

In 2014, psychologist Sanda Dolcos (University of Illinois) carried out some great experiments exploring how motivation is affected by whether we talk to ourselves in either the first or second person.

In one study, she had people try to complete some difficult anagrams. Some participants were asked to motivate themselves by using first-person sentences (“I can do it”) whilst others gave themselves a second-person pep talk (“You can do it”). Those using the ‘you’ word completed far more anagrams than those using the ‘I’ word. Dolcos then asked other people to motivate themselves to exercise more in either the first person (‘I should go for a run now’) or second person (‘You must go to the gym’). Those using the ‘you’ word ended up feeling far more positive about going for a run or visiting a gym, and planned to take more exercise over the coming weeks.

Whatever the explanation, it is a simple but effective shortcut to motivation. And it seemed to work for Andy Murray: he broke Djokovic’s serve, won the shortest set of the match, and emerged victorious.

When you are in need of some fast acting and powerful motivation, talk to yourself using the magic ‘you’ word. Tell yourself that ‘you can do it’, that ‘you’ love whatever it is that you have to do, and that ‘you’ will make a success of it. You may not end up winning the US Open, but you will discover how just one word has the power to motivate and energise.

Dolcos, S., & Albarracin, D. (2014). The inner speech of behavioral regulation: Intentions and task performance strengthen when you talk to yourself as a You. European Journal of Social Psychology, 44(6), 636–642.

Connor Tomlinson is 27 years old, a regular commentator on GB News and a self-described “reactionary Catholic Zoomer”. But this wasn’t always the case. In a YouTube interview from 2024, Tomlinson says he was baptised as a baby but was “not a regular church attendee”. It was only, he says, in 2019 when conservative Christian friends inspired him to “make the grown-up decision to just believe it and see what happens” that he started attending weekly mass and was confirmed as a Catholic.

Soon afterwards, he began writing opinion pieces for conservative media outlets before becoming a contributor to a group called Young Voices. Supported by the US-based Koch Foundation, the group helps to place young, right-wing commentators in prominent media roles. Since then, Tomlinson’s social media channels (he has more than 100,000 followers apiece on YouTube and X) have hosted hundreds of videos from a conservative Catholic perspective – ranging from rants about immigration to chats about everything that is supposedly wrong with women.

In one YouTube clip from 2023, Tomlinson and disgraced priest Calvin Robinson claimed that “seeking attention on social media [was] a form of digital infidelity … makes your man feel unwanted, and profanes the sanctity of your relationship”. Criticising both liberal and conservative women, whom Tomlinson referred to as “titty Tories”, they suggested that posting selfies online negated any worthwhile work these women may have done and advised them, quoting from the New Testament, to focus on “finding a good husband”.

Tomlinson and Robinson – and other Christian right-wing figures espousing misogynistic views – are gaining a disturbingly large following of young men, including in the UK. Over the last 20 years, the political divide between young British men and women has grown, with the former more likely to identify as right-wing and, according to a 2024 study from King’s College London, to feel negatively about the impact of feminism.

There has also been a growing narrative around disaffected young men turning to religion. Last year, the Bible Society reported a five-fold increase in the number of 18-24-year-old men attending church services in the UK since 2018. The report was based on self-reported data, and doesn’t reflect an actual rise in recorded attendee numbers, but it was nonetheless seized on as evidence that this demographic is turning to faith.

What does seem to be true is that parts of the “manosphere” – the umbrella term for men’s rights activists, pick-up artists, incels and others – are going Christian (or claiming to). It’s a shift that is being leveraged to gain legitimacy and influence for their misogynistic ideas in the outside world – including in political circles, such as via Tomlinson’s close friend and former Reform UK party candidate, 29-year-old Joseph Robertson.

Women and the downfall of humanity

Whereas the leaders of the manosphere often express a blatant hatred of women and a focus on manipulative pick-up techniques, figures like Tomlinson rely more on ideas of the perfect Christian family. But dig a little deeper and the lines between the two worlds begin to blur.

Take US author and so-called “Godfather of the Manosphere” Rollo Tomassi (real name George Miller). Tomassi, who gained fame with his “Rational Male” book series, teaches his followers that women with multiple sexual partners are “low-value”. He has also written that women use sexism as an excuse for their own unsuitability for professional careers.

For Tomlinson, women are unfit for doing 9-5 jobs, because they are “impressionable, led by material incentives and easily manipulated”. Therefore, he claims, it is the job of a man to “be the vanguard against the degeneracy that has wasted so many fertile years and the potential of women who are not ugly, who could’ve been someone’s wife”. Both he and Robertson are keen to blame all manner of societal problems on the contraceptive pill.

The only real difference is that, for Tomassi, these misogynistic beliefs legitimise men using and abusing women because that’s what “nature intended”. Meanwhile, for Tomlinson and other Christian figures, it compels men to take women under their wing and teach them how to live because that is “God’s plan”. In both worldviews, women are responsible for the downfall of humanity and only valued when serving the whims and needs of men.

But despite this overlap, both Tomlinson and Robertson are strongly critical of the manosphere. Tomlinson believes it “perpetuates the paradigm of the industrial and sexual revolutions ... which made men and women so maladaptive and unattracted to each other in the first place”. Robertson appears to agree with this and has written florid critiques of self-appointed manosphere leader Andrew Tate and the perils of pornography.

When we reached out to Tomlinson about his statements and their inherent misogyny, he told us that: “The time I spend with my wife, mother, grandmothers, friends, etc. matters more to me than if someone I don’t know calls me mean on the internet … Men should be considerate, compassionate, and chivalric toward the women they love and who love them. Women should return that compassion and consideration in kind. We may do it in different ways, but that’s the core of it. Ideology obscures those relationships, makes people think in zero-sum terms about competing categories, and is making people miserable … So you’re welcome to engage with what I’ve written and said throughout my career, and I hope you do so in good faith. But I don’t feel compelled to defend myself against such a bad faith charge as ‘You’re a misogynist.’ I would rather spend time with my wife.”

The “God pill”

Tomlinson may want us to think that his religious beliefs are in no way misogynistic. But the reframing of manosphere theories as the “Christian” way to think and live has been going on for several years, and is evident when we look at the trajectory of certain figures within the manosphere. Daryush Valizadeh (aka Roosh V) was one of the first. A key figure in the early manosphere, he taught a version of Tomassi’s beliefs as a pick-up artist – a man who uses coercion and manipulation to pick up women – via his lucrative Return of Kings website. In 2019, after disappearing from the scene, he returned with a blog post explaining that he had “received a message while on mushrooms” and was taking the movement in a new direction.

Having replaced the so-called Red Pill (a trope where “taking the Red Pill” reveals the unsettling truth of reality, in this case revealing women’s “true intentions”) with the “God Pill”, his commitment to misogyny remained the same, only now it was fuelled by the teachings of the Russian Orthodox Church. While the rebrand lost him many fans, it opened the door for a new wave of manosphere influencers keen to present their misogyny as piety. No longer seeking to humiliate women by coercing them into sex, they were now “good guys” working to reinstil the supposed natural order of things.

Since then, other men have come into this space, such as Tomlinson, Robertson, Calvin Robinson and other protégés of the far-right student organisation Turning Point UK. Justifying their hatred of women via pseudo-intellectual arguments and with reference to Christian teachings, they are far more appealing to men who wish to subjugate the other sex while avoiding associations with the bare-faced aggression and criminality of figures like Andrew Tate.

Even more alarmingly, convincing themselves and others that they are educated men working for God, these men have muscled their way into speaking engagements and onto national news channels like GB News and Talk. The groups they associate with include the anti-LGBT Christian advocacy group ADF Legal (which advised the Orthodox Conservatives, a pressure group with which Robertson is closely involved); the Family Education Trust, a group with evangelical ties whose policy suggestions have made it into parliamentary discussions (Robinson and Tomlinson attended their conference last year); and anti-abortion group Right to Life, for whom Tomlinson, Robertson and Robinson are all advocates. These groups seek to limit access to abortion, prevent children from learning about the LGBT community and push Christian and traditionalist beliefs into policymaking.

They frame their arguments as the only way to “save” the west, yet the people who truly benefit are white Christian men and the women who obey them. Feminists, people of colour, Muslims, the LGBT community and others have no role in their brave new world.

What's the appeal?

Many of these men also deny that they are “far right”, while holding beliefs in line with both ethnic and Christian nationalism. Ethno-nationalists believe that identity is based on ethnicity and culture, whilst Christian nationalists believe that Christianity is the only way to save race and nation. In both cases the words “race”, “culture” and “nation” relate only to the white, western world.

Examples of both are present in most of Tomlinson’s output. In an interview with the Daily Heretic, a YouTube channel hosted by journalist Andrew Gold, who focuses on “culture war” topics from a right-wing perspective, Tomlinson said he was not an ethno-nationalist. But he also claimed that former Prime Minister Rishi Sunak was not “nationally English” and that only those who could trace their British ancestry back to the Neolithic era were truly British. He believes that Britain is a Christian country; a bold claim given that fewer than half of respondents to the most recent census identified as Christian and 37 per cent claimed no religion at all.

These attitudes appear to be spreading, and are voiced by some Reform UK party candidates, including Joseph Robertson. In a video posted to X last year, Robertson claimed that “the natal crisis gripping the west” was happening because “the sacred act of motherhood ... has been demeaned and discarded” by feminists, which has “disrupted the natural harmony between men and women” and was “derailing the proper course of western history”.

Part of the appeal of these figures comes down to delivery. Whilst the angry rants of Andrew Tate are easily dismissed, the calm manner in which Tomlinson et al present their ideas makes them more accessible. Whether they are telling their audience that they need to “bring back women shaming women” to enforce modesty, or that men should be “the rock upon which [their wife’s] emotional waves may break”, it is delivered with the calm confidence of a Man Who Knows What He Is Talking About.

And while many manosphere leaders are actively grifting their audience, Tomlinson and his cohort appear to believe their own hype. These are not angry old men who will wrap themselves in the flag while getting arrested alongside Tommy Robinson; they present themselves as young, relevant “intellectuals”. For an audience of lost young men, uncertain of their role in life, that is more than enough reason to trust them.

Crisis of belonging

In the course of writing my book Incel: The Weaponization of Misogyny, I have had extensive conversations with youth leaders and teachers. Those conversations have made clear that there is a crisis of belonging among boys and men in their teens and early twenties. They have friends, but the friendships stop at the school gates – and a lack of community spaces sees them confined to their bedrooms and screens. The algorithms are designed to promote the most lucrative content, which is often the most controversial.

Faced with a polarised, rage-filled digital world, which labels young men either “Nazis” or “soy boys” (progressive men who are deemed less masculine because they care about women), it is possible to see the appeal of the Christian manosphere’s calm utopia, which seems to offer a middle ground.

But aside from increasing support for the far right and growing levels of misogyny, Tomlinson et al are offering young men a version of a life which is both socially and financially unfeasible. For the vast majority, it is no longer possible to survive on a single income. As with the tradwife movement, which sees influencers advocating for women to quit their jobs, marry and start a homestead, Christian manosphere figures are recommending that young men seek out a simple life with a simple wife, while they themselves walk the corridors of power funded by podcast subscriptions.

In the offline world, parts of the Christian establishment are taking steps to separate themselves from these divisive figures and boost their image as welcoming, egalitarian spaces. Last year, Calvin Robinson was dismissed from a US diocese of the Anglican Catholic Church after he appeared to mimic Elon Musk’s “Nazi-style salute” at their National Pro-Life Summit, and he has never been ordained in the Church of England despite completing his training at Oxford. A year earlier, the Free Church of England fired one of their reverends for posting multiple online videos in which he criticised “woke” topics and referred to progressive female ministers as “witches”. And the new Archbishop of Wales is an openly gay woman.

But for those in the Christian manosphere, this simply provides further proof of the British Christian establishment “going woke” and will see them drift deeper into extreme orthodoxy to justify their own positions.

Thankfully, there are many secular groups working with young men. Last year, Mike Nicholson, CEO of the group Progressive Masculinity, told me how his team were going into schools and creating spaces for young men to talk about issues in the online world. Explaining that they “don’t teach boys to be men” but “give boys the agency and the freedom to design the man that they want to be”, Nicholson said that when boys were challenged on harmful beliefs in a safe environment the response was “phenomenal”. He believes in the value of discussion. “This idea that boys and men don’t like to talk is an absolute fallacy,” he said. “In the right spaces, somewhere they feel safe, they love to.”

Parents concerned about their sons can also improve their communication. The PACE model, developed by an educational psychologist to help children through trauma, can be adapted to almost any family situation. An acronym that stands for Playfulness, Acceptance, Curiosity and Empathy, it describes how to approach concerning child behaviour without showing fear or censure.

Young men are bombarded with digital hate on a daily basis. It is our job to provide them with a nurturing, safe environment in which they can be encouraged to think critically about online content and the way they interact with it. It’s vital to remember that young men consuming harmful content are seeking answers to internal questions, not validation for pre-existing beliefs. If we engage with them, we can lead them to healthier sources than the Christian nationalist movement.

This article is from New Humanist's Spring 2026 edition. Subscribe now.

It’s an old, old story: Nasty Unfaithful Sex-Crazed Man treats Poor Nice Monogamous Woman like crap. Everything explodes, and they all live divorcedly ever after. This is also the plot of singer Lily Allen’s latest album, West End Girl. Only here it has a fresh new twist: Nasty Unfaithful uses non-monogamy as a cover for his shitty behaviour, forcing Poor Nice Monogamous to (kind of) consent to him seeing other people.

Non-monogamy is becoming increasingly popular, with the growing acceptance that having only one romantic and sexual partner isn’t for everyone, and that there are other valid ways to conduct relationships. Advice about how to do it ethically isn’t hard to find: there are hundreds of books, websites, therapists and communities out there. The narrative arc of West End Girl is an absolute disaster movie, and an entirely predictable one. It’s a caricature of what to avoid: the bad communication, the fact that one partner clearly hates the whole idea and is being pressured into it, the attempt to implement rigid rules in order to “tame” the extramarital encounters. (The rules fail to do this, and are promptly broken anyway.)

But the story resonates deeply for so many listeners precisely because it is familiar. Anyone who’s spent time in non-monogamous circles (or, frankly, on any online dating platform) knows this story too, and these men. Every woman who ever fell for a modern Nasty Unfaithful can see herself in West End Girl, and now she has a whole album of (absolutely belting) new tunes with which to vent her feelings. So far so innocuous: new generations need new versions of old stories.

And this is a story. Allen describes West End Girl as “autofiction”. Crucially, this means it’s not autobiography: it’s a collaboratively made album, and some of the content is made up. The main character (Poor Nice Monogamous) is an alter ego of Allen. She is the reluctant partner “trying to be open”, trying to be something she’s not. So many of us have been that woman, trying to defang our fears of abandonment with some kind of self-improvement project.

Did Allen the flesh-and-blood human really do the things the character does in these songs? Did her real husband, or the real “other woman”, do the things described? We’re invited to imagine that they did. The lines between real people and fictional characters are blurred in exceptionally clever ways.

Social media is part of the album, as well as part of the broader media landscape that feeds and is fed by it. Instagram, Tinder and Hinge all show up in the lyrics. The proximity of the album’s launch to the release of the last season of Stranger Things – starring Allen’s erstwhile husband – extended the tendrils of the Upside Down into the West End Girl storyline. The album’s promotional materials have caused a stir, particularly the specially branded butt-plug USB drives – a callback to the now-infamous song “Pussy Palace”, where Allen’s alter ego describes discovering her husband’s sex toy-laden New York City pad (which, again, may or may not be a real event).

Meanwhile, the storytelling itself gently invites the listener to blend life with performance: the first song on the album is about theatre and acting, and the inciting incident for the entire drama is that the narrator gets cast as the lead in a play, as Allen was in real life. The second song then describes a question posed by Nasty Unfaithful as a (fucking) “line”.

If all the world’s a stage, maybe all the world’s a concept album too. Where does West End Girl begin or end? And why does it matter?

As individuals, we relate the stories we consume to our own bodies, our own senses of self. The labels we use to refer to ourselves are tools of self-creation – be they dating app pseudonyms, metrics like height and age, or social roles (the Allen character describes herself variously as “mum to teenage girls”, “modern wife” and “non-monogamummy”). These are the ingredients for alchemising life and people into recognisable narratives.

In our late capitalist economy, those narratives are often structured by what or whom we possess, and what or who possesses us. As Allen sings: “You’re mine, mine, mine”. When you approach a relationship as a casting of another person in the role of your partner, you objectify them: they become supporting cast in the drama of your life.

Meanwhile, of course, they are busy doing exactly the same thing to you. Existentialists and romantic partners Simone de Beauvoir and Jean-Paul Sartre disagreed on whether heteroromantic love could ever be more than a power struggle, each trying to “win” by objectifying the other. Beauvoir was the more optimistic of the two, but remained in a life-long open relationship with Sartre regardless. In Allen’s penultimate song, the narrator refuses to let her (ex-) husband “win”.

But this is about more than individuals. Media narratives play a key part in the social construction of romantic love itself, as well as in the construction of gender roles in romantic relationships. When we keep telling the same story over and over, we strengthen the grip of existing scripts.

West End Girl plays into the standard narrative: it’s men who want sex and therefore non-monogamy. Women who agree to it to try and make men happy will get screwed over and it will all end in tears. It’s obvious that misogynistically practised non-monogamy is no better or worse per se than misogynistically practised monogamy. But it’s more comfortable to demonise non-monogamy than to come to terms with the reality (and ubiquity) of misogyny. It’s easier to cling to the myth that monogamy would solve misogyny, if only men could keep it in their pants long enough. In the Paris Review, Jean Garnett calls West End Girl “a rather neat, crowd-pleasing, bias-confirming presentation of nonmonogamy that casts male extramarital libido as the bad guy and Allen as the victim.” Of course it is: that’s the story people want. That’s how you sell albums and concert tickets and branded butt-plugs.

Which brings us back to capitalism, and the sheer marketing genius surrounding West End Girl. The album is a giant invitation to chime in. It reads like a friend’s post on social media, on which we are expected to comment “you go girl!” and “yes, men are bastards who just want tons of pussy!” It’s chatty and unchallenging, rather like a patter song from Gilbert and Sullivan’s Trial by Jury. There, a late Victorian incarnation of Nasty Unfaithful gets hauled in front of a law court (and, of course, the theatre audience) to be judged for abandoning a Poor Nice Monogamous, offering in his defence the touching plea that “one cannot eat breakfast all day, nor is it the act of a sinner, / when breakfast is taken away, to turn his attention to dinner.” That defendant, Edwin, is a caricature in a comic operetta, though. The trial-by-audience of Allen’s characters, and the real people behind them, is not a joke.

Sex sells. And people assume non-monogamy is just about getting lots of sex – an impression not exactly refuted by this album. In reality, non-monogamy takes many forms. Polyamory, for instance, is defined by openness to more than one loving relationship. But West End Girl is not a nuanced treatise on love. It is first and foremost a marketing triumph. Lily Allen and her collaborators are presumably making a lot of money out of all this. Is that, under capitalism, what passes for feminism?

This article is from New Humanist's Spring 2026 issue. Subscribe now.

With the great powers facing off and a global authoritarian slide, we are entering a new era of brazen propaganda. But for all those who keep their heads down, there are many more who refuse to go along with it.

In the Spring 2026 edition of New Humanist, we celebrate the heroes of free thought on the frontlines of the battle against dogma and ideology – from the Belarusian women standing up for democracy despite imprisonment and exile, to the poet translating Orwell into Chinese and the therapists helping people rebuild their belief systems after leaving religious groups.

We also hear from Harvard's Steven Pinker about defending America's besieged universities and historian David Olusoga about why we must revisit the story of the Empire.

Keep reading for a peek inside!

The Spring 2026 edition of New Humanist is on sale now! Subscribe or buy a copy online today, or find your nearest stockist.

Defend the truth!

We hear from six champions of free thought around the world about how to fight back against propaganda and protect the truth – including journalist Andrei Kolesnikov on Russia's last remaining dissidents, campaigner Zoe Gardner on how to fight anti-immigration misinformation, and climate and vaccine scientists Michael E. Mann and Peter Hotez on how everyone can do their bit to defend science.

"Today, harm is being done by the spread of despair and defeatism, some of it weaponised by bad actors like Russia to create division and disengagement. We are, in fact, far from defeat"

Breaking free from belief

A growing number of UK therapists are helping people "deconstruct" their beliefs after leaving high-control religious groups. Journalist Ellie Broughton meets the therapists leading the charge, and the clients working to rebuild their belief systems.

“Over time, losing your religion may start to feel less like a loss, and more like an opportunity to rebuild yourself from the ground up”

Inside the women’s prisons of Belarus

Women played a key role in the Belarusian pro-democracy movement that came to a head in the summer of 2020. More than five years later, hundreds of women are still being held as political prisoners or have been forced to live in exile. And yet, they refuse to give up. Journalist Alexandra Domenech hears their stories.

"Despite the government’s attempts to crush their spirits, the women who have emerged from penal colonies tell stories of defiance and solidarity"

Steven Pinker on America's besieged universities

Harvard's high-profile psychologist Steven Pinker has long argued that a lack of political diversity at US universities is skewing research – but now there's a new and more dangerous threat from the Trump administration. He spoke to us about why it's important to fight attacks on academic freedom, no matter where they come from.

"Professors and students have been harassed, fired, sanctioned and censored for constitutionally protected speech"

David Olusoga on Empire, religion in Britain – and The Traitors

Following the success of his BBC Two series Empire, historian David Olusoga explores the challenges of talking about the British Empire today, the influence of the Church in Britain – and why carefully crafted logic got him nowhere on Celebrity Traitors.

"Much of the hostility towards anything to do with the Empire is just a desire to not have to hear difficult things about Britain"

Also in the Spring 2026 edition:

- Journalist Katherine Denkinson explores how the manosphere is using God to target young men

- Laurie Taylor digs into how life-changing rethinks can hinge on a single word

- Zion Lights looks at the biomedical breakthroughs giving us a reason to feel cheerful

- We can now study diseases to track people and fight crime, writes Oxford bioethicist Tess Johnson – but should we?

- Richard Pallardy explores the havoc that can follow when farm animals breed with wild species

- Award-winning comedian Shaparak Khorsandi on how Stranger Things united the generations

- BBC Radio 4 presenter Samira Ahmed on the new nouvelle vague

- National treasure Michael Rosen explores the history of the word "climate" in the latest edition of his language column

- Marcus Chown on how our tiny brains are able to make sense of the Universe

- Lily Allen's album West End Girl got everyone talking about non-monogamy – but it tells an old story, argue academics Carrie Jenkins and Carla Nappi

- Jessica Furseth on the undiluted joy of the Wes Anderson archives

- Jody Ray takes a tense but awe-inspiring tour of Libya

- We talk to Russian-British comedian Olga Koch about comedy, class and computers

Plus more fascinating features on the biggest topics shaping our world today, book reviews, original poetry, and our regular cryptic crossword and brainteaser.

David Olusoga is a British-Nigerian historian, author, presenter and BAFTA-winning filmmaker. He is professor of public history at the University of Manchester. His most recent television series “Empire” – which explored the history of the British Empire and its continuing impact today – aired on BBC Two last year.

Let’s start by talking about the BBC series Empire. What was the motivation behind it?

We have conversations about the British Empire in this country as if we are the only people involved, which, given the nature of empires, is not possible. We often ignore the fact that conversations about the Empire are taking place in those many countries that were formerly British colonies.

There was a huge debate about the meaning of the British Empire in India – historians like Shashi Tharoor are kind of superstar historians because their ideas and their writings about the Empire have become enormous. In the Caribbean, there’s incredible scholarship, but also heritage work and memorialisation.

This conversation about the Empire is not a monologue within this country. It needs to be a dialogue with the countries that were colonies or territories of the British Empire. So the aim is to try to explore the story of the Empire as a history that is being revisited by people across the world. Because more than two billion people are citizens of nations that were formerly British colonies or protectorates or dominions.

What has the reaction been like?

Most people’s reaction to most aspects of history is that it’s interesting – not that this is a challenge to who I am, or an insult to my nation. And the viewing figures for Empire – it was one of the most successful factual programmes on British television in 2025 – show that most people are willing to engage in recognising that there’s much about the Empire that we don’t know.

But we do live in a moment when there is a kind of rearguard action to defend an “island story” version of the Empire, which takes any acknowledgement that the British Empire, like all empires, was at times extractive [as an unfair criticism].

What’s your response to this defensive attitude?

The British Empire lasts 400 years. It involves millions and millions of people, and there are all sorts of motivations and actions and behaviours that range from the genocidal to the purely altruistic. But the structure of an empire ... people don’t set sail to set up colonies in order to benefit the people who already own the land. Empires, by their nature, are about the mother country, the imperial power, more than the colonies.

But the expectation is that this is a history that must be dealt with as a piece of historical accountancy – that if you cover [a negative aspect of the Empire, you must cover a positive one]; if you talk about the Indian famines, you must talk about the Indian railways. When I write about the First World War, nobody ever confronts me for talking about the strategic failures of the generals on the Western Front, or the death toll, or the suffering in the trenches. This only happens with the subject of the British Empire, and particularly anything to do with slavery, where we suddenly feel that we have to be talking in terms of “balance”.

Much of the hostility towards anything to do with the Empire is just a desire to not have to hear difficult things about Britain, and they’re only difficult if you have a magical and exceptional view of Britain, that Britain is a nation unlike every other nation that’s ever existed in all of human history.

Does your work on the Empire feel personal? The phrase that people sometimes use is “we are here, because you were there,” right?

I don’t really think about myself that much, because I don’t look to history to give me a warrant to exist in my own country. There is this idea on the far right that the history of Empire and black history are ways of people of colour claiming their right to be in Britain. I don’t feel that needy. I pay my taxes, I contribute to my society. I don’t need there to have been earlier generations of black people in Britain.

However, I do deploy my background within story-telling, because I’ve been making television programmes as a producer and presenter for a long time, and I’m very good at understanding how to use your own experiences to make stories more impactful. So we end the Empire series with me deploying my ancestors to make the point that confronting the history of Empire, recognising it as part of our history, is not something about guilt or pride.

My Nigerian ancestors were in a town called Ijebu Ode in the 1890s that was attacked by the British, so I’m descended from people who were attacked by the British army. But one of my ancestors on my mother’s side was a Scottish soldier in the pay of the East India Company. So, if I did history in the hope of eliciting guilt from white people, I have a rather large Achilles heel.

I’ve experienced something similar myself. I read a review of one of my books about scientific racism, which argued “well, he would think this, because he’s half-caste.” That’s not a word I would use myself. But I still had a moment of self-reflection, asking myself, “would I be making the same arguments if I didn’t have Indian parentage?” I concluded that the answer was yes, but it stung me in a peculiar way.

These arguments aren’t arguments. They’re attempts at silencing people, at saying that certain voices saying certain things are illegitimate. What these arguments are all saying is, “I don’t like what this history is saying. Therefore, it’s not real history.” It is easier to attack the messenger than it is to deal with something that you’re uncomfortable with.

Episode two of Empire was particularly striking for me, because it’s about a story that I think isn’t well told – about this period of indenture. As you know, my great-grandparents were Indian indentured slaves (I’ve made a personal decision to use the word “slaves”) brought to Guyana. Could you describe what happened in this period?

In some ways, after slavery was outlawed, the British went back to what they had before slavery, which was the indenture system. Poor British people would sell their labour for a period of years – five, sometimes 10 or longer – and they were transported to the colonies. They would then work for a free settler for that number of years, and at the end of it, they were freed. (If they were lucky; this didn’t always happen.)

But the story of indenture from one part of the Empire to another is particularly unknown because there’s no connection to Britain. In the case of India, somewhere between one and two million Indians left to become indentured labourers. There was a huge range of experiences – from the murderously exploitative to, you know, extremely positive for people being able to transform their lives, escape caste, buy land. It’s a system that lasted from the early 19th century, with some stops and starts, right through until almost the First World War.

Can you tell us about how your family got here?

My father was from Nigeria. He was studying here in Newcastle [when my parents met]. Then my parents moved to Nigeria and I was born there. They then separated and we moved back to the UK. So I’m a 1970s story of movement around former Empire connections, because Nigeria had been a British colony. I grew up in Gateshead, near Newcastle, and it was not a very diverse place in the 70s and 80s. It still isn’t. I was brought up in a white, working-class world with my grandparents and my mum and siblings. I have a strong feeling of affection for the north-east and a strong sense of belonging and identity to that.

What’s your family’s relationship with religion?

My mother worked really, really hard to not ever say what she felt, and we went through a normal comprehensive school system with the sort of religiosity and religious education lessons that you’d expect. It [the existence of God] just always struck me as really unlikely.

But I remember being young and thinking that believing in this stuff was part of being good, and I wanted to be good and to do my homework and not get in trouble and not upset my mum. So there was a feeling of “you should believe in this, and you should say the prayers and sing the carols” and all that sort of nonsense. There was a brief period when I went to Sunday school, because it was what you did if you were trying to be a good boy. But that clashed with the fact that I just always felt it was nonsense.

As I got older, I just wanted nothing to do with it. One of the challenges I’ve had as a historian is that I’m so uninterested in religion. And I’ve had to make myself [engage with it] because you can’t study a past in which, for almost everybody, part of their calculations for every action they took was what was going to happen to their mortal soul. So I forced myself to read religious history, and particularly around the early Church, which was the university of the world. It was the centre of learning.

What about the relationship between the Church and chattel slavery?

The Church was involved in justifying slavery, but it was also involved both with the abolitionists and with enslaved people themselves, who found inspiration in the biblical stories – the obvious stories of Pharaoh and the escape from Egypt. So it was involved in every aspect. There were religious figures who justified slavery. There were religious organisations and individuals who owned enslaved people, who promoted and propagandised for slavery. And there were religious voices who were motivated by their religiosity to oppose it.

And the influence of the Church today?

I do resent living in a country where it is very difficult to educate your children without them being indoctrinated in religion. I believe in absolute toleration, but I am opposed to religious privilege. I don’t see why we have bishops in the House of Lords, having power in our political system. I don’t accept that collective acts of Christian worship should be imposed upon my child, or anybody else’s child. I also think that religious schools are becoming increasingly a force for disunity in the country.

It felt significant that Tommy Robinson did a Christmas carol service last year, which attracted more than a thousand people, and he’s had support from figures like Elon Musk. What do you think about Christian nationalism in the US, and the efforts to export it over here?

I think it’s people with a lot of money who can’t accept how different Britain is to America. You can’t have Christian nationalism in a country that’s essentially not a Christian country. But if you’re trying to keep your American paymasters happy, if money is coming in from America to foment greater division in Britain, then you have to do what your paymaster says.

Last time I went to church, it was young Nigerians and very old white British people. And if you go to a Catholic church in many parts of this country, everyone’s Polish. So if you’re interested in bums on seats, Tommy Robinson is not the obvious conduit to achieve that.

I’d like to see him go to church in north-east London with a big Nigerian community. See how he gets on there. But before we end this interview, we have to talk about something more light-hearted: your appearance on The Celebrity Traitors.

I was somehow in the final, which has been one of the most watched moments in recent TV history. It’s been interesting being involved in something, at this very difficult time, that made people really happy. Sadly, what made them really happy was seeing those of us who appeared to be incredibly incompetent. So it would have been nice to have made people happy with competency, rather than incompetency.

Rather than incompetency, wasn’t it that you were making very rational, evidence-based arguments, that were just always unfortunately wrong?

I’ve been trying to think about what happened. I spend my life reading, gathering evidence, and then trying to weigh it out, but when there was no evidence, I entered this kind of desperate starvation state, looking for it. I think my theories were entirely rational, but they were also entirely wrong. So for example when Stephen Fry suggested that we should not have discussions at the roundtable and instead we should just vote, most people went, “That’s interesting, Stephen,” and I went, “That would be really useful if you were a traitor.”

So you went for him.

You know, in lots of Victorian novels, there’s the boring accountant that the young woman doesn’t want to marry, and then the exciting cavalry officer? I feel I proved myself to be the boring accountant.

You’re Daniel Day-Lewis in A Room With a View.

Exactly. I should have been the young guy with my chest out, in the field, kissing Helena Bonham Carter.

Did doing The Traitors help restore your faith in TV? This is an industry that is slowly being eroded, and the BBC is constantly under attack.

Well, it didn’t change the BBC being under attack – we’ve lost a director general since Traitors went out. But it is a phenomenon. When I was a kid, when everyone watched something, the whole nation had been through this shared experience. It’s very rare now. The big TV hits of last year were Adolescence and Traitors; they couldn’t be more different but they both began national conversations.

But my passion for TV is because that was a way I could do history. I had a kind of fork in the road: I could take the academic path and the PhD that was in front of me, or do something else. I wanted to do history in the way that it had enraptured me when I was young, which was watching it on television. The person who made me want to do TV – alongside my history teacher Mr Faulkner – was [the historian and BBC presenter] Michael Wood.

But also, I was brought up in a council estate. I had that deep fear of debt that people brought up with no money have. TV offered me a way of doing history and getting paid. Meanwhile, a sector like academia, in which you have to self-fund the necessary qualifications to advance, is closed to a great majority of the population.

What is it about Michael Wood’s work that particularly inspired you?

He did In Search of the Dark Ages and a whole series of different history projects with the BBC. He was often talking about what we don’t know. And I love the idea of unknowability. That’s what I try to do on television, to get to that precipice of what is knowable and unknowable, and then ask questions of the view.

The magic of history is that you can know enough to empathise with people who lived and died before you were born. You can imagine yourself in their situation, and that connection with people of past centuries is a magical thing. I feel deeply emotional about history. Sometimes I’m in an archive, thinking about someone who’s been dead for 100 years. I’m the only person in the world thinking about their existence.

Talking about humanism, it’s that idea that they [people from history] are exactly the same as me, and that I can imagine what they must have gone through, what the city that they walked through was like, and how different it is from the one of today.

I think exactly the same about evolutionary history. It’s just that the history I study is a bit older than yours. For example, the peopling of the Americas is a big question. And a couple of years ago in White Sands, New Mexico, they discovered fossilised footprints that put the date of people there several thousand years earlier than we’d previously thought. The paper described the prints of a young person, possibly a teenager, going out on a looped walk of about a mile. And at several points there are the footprints of a two- or three-year-old, but they come and go. So that’s a person with a toddler, and the toddler’s tired, and you pick it up, and it walks for a bit. Twenty thousand years ago, people were doing exactly what you do in a park on a Sunday morning with your child. That, for me, is what you’re talking about.

I remember that. Maybe it’s because I was new to fatherhood when it came out. But just ... the universality!

So, what’s next? Will you be doing Strictly Come Dancing?

It’s been mooted. I think I could do Strictly for one reason, which is that I’ve spent a lot of my life fighting against racial stereotypes. And I could destroy the racial stereotype that all black people can dance. But no, I think the country has suffered enough.

This article is a preview from New Humanist's Spring 2026 issue.

I recently ran a session at the University of Hertfordshire on the 7 factors that I believe underpin an impactful presentation.

While preparing, I was reminded of a conversation I had with a hugely knowledgeable director who had worked with many magicians. He explained that magicians often weaken their performances by letting the audience experience the magic at different times.

Imagine that a magician drops an apple into a box and then tips it forward to show that the apple has vanished. The box must be turned from side to side so that everyone in the audience can see inside. As a result, each person experiences the disappearance at a slightly different moment and the impact is diluted.

Now imagine a different approach. The apple goes into the box. The lid is pulled off and all four sides drop down simultaneously. This time, everyone sees the disappearance at the same time and the reaction is far stronger.

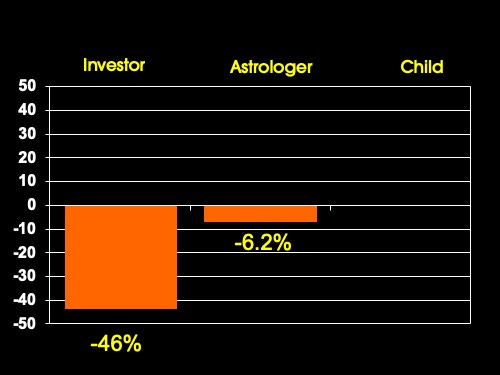

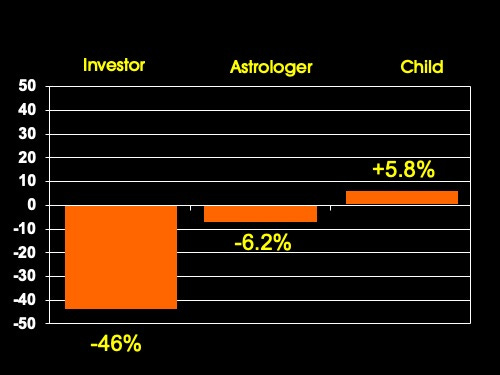

The same principle applies to talks. Some years ago, I ran a year-long experiment with the British Science Association about investing on the FTSE 100. We gave a notional £5,000 each to a professional investor, a financial astrologer (who invested based on company birth dates) and a four-year-old child who selected shares at random!

If I simply show the final graph, people in the audience interpret it at different speeds. Some spot the outcome instantly. Others take longer. The moment fragments.

Instead, I build it step by step. First, I show a blank graph and explain the two axes.

Then I reveal that both the professional investor and the financial astrologer lost money.

Finally, I reveal that the four-year-old random share picker outperformed them both!

Now the entire audience sees the result at the same moment — and reacts together. That shared moment creates energy.

It’s a simple idea:

Don’t just reveal information. Orchestrate the moment of discovery.

If you want bigger reactions, stronger engagement, and more memorable talks, make sure your audience experiences the key moments together.

This week we have a quick quiz to test your understanding of sleep and dreaming. Please decide whether each of the following 7 statements is TRUE or FALSE. Here we go…

1) When I am asleep, my brain switches off.

2) I can learn to function well on less sleep.

3) Napping is a sign of laziness.

4) Dreams consist of meaningless thoughts and images.

5) A small amount of alcohol before bedtime improves sleep quality.

6) I can catch up on my lost sleep at the weekend.

7) Eating cheese just before you go to bed gives you nightmares.

OK, here are the answers…

1) When I am asleep, my brain switches off: Nope. When you fall asleep, your sense of self-awareness shuts down, but your brain remains highly active and carries out tasks that are essential for your wellbeing.

2) I can learn to function well on less sleep: Nope. Sleep is a biological need. You can force yourself to sleep less, but you will not be fully rested, and your thoughts, feelings and behaviour will be impaired.

3) Napping is a sign of laziness: Nope. Your circadian rhythm makes you sleepy towards the middle of the afternoon, and so napping is natural and makes you more alert, creative, and productive.

4) Dreams consist of meaningless thoughts and images: Nope. During dreaming your brain is often working through your concerns, and so dreams can provide an insight into your worries and help you come up with innovative solutions.

5) A small amount of alcohol before bedtime improves sleep quality: Nope. A nightcap can help you to fall asleep, but it also causes you to spend less time in restorative deep sleep and to have fewer dreams.

6) I can catch up on my lost sleep at the weekend: Nope. When you fail to get enough sleep you develop a sleep debt. Spending more time in bed for a day will help but won’t fully restore you for the coming week.

7) Eating cheese just before you go to bed gives you nightmares: Nope. The British Cheese Board asked 200 volunteers to spend a week eating some cheese before going to sleep and to report their dreams in the morning. None of them had nightmares.

So there we go. They are all myths! How did you score?

A few years ago I wrote Night School – one of the first modern-day books to examine the science behind sleep and dreaming. In a forthcoming blog post I will review some tips and tricks for making the most of the night. Meanwhile, what are your top hints and tips for improving your sleep and learning from your dreams?

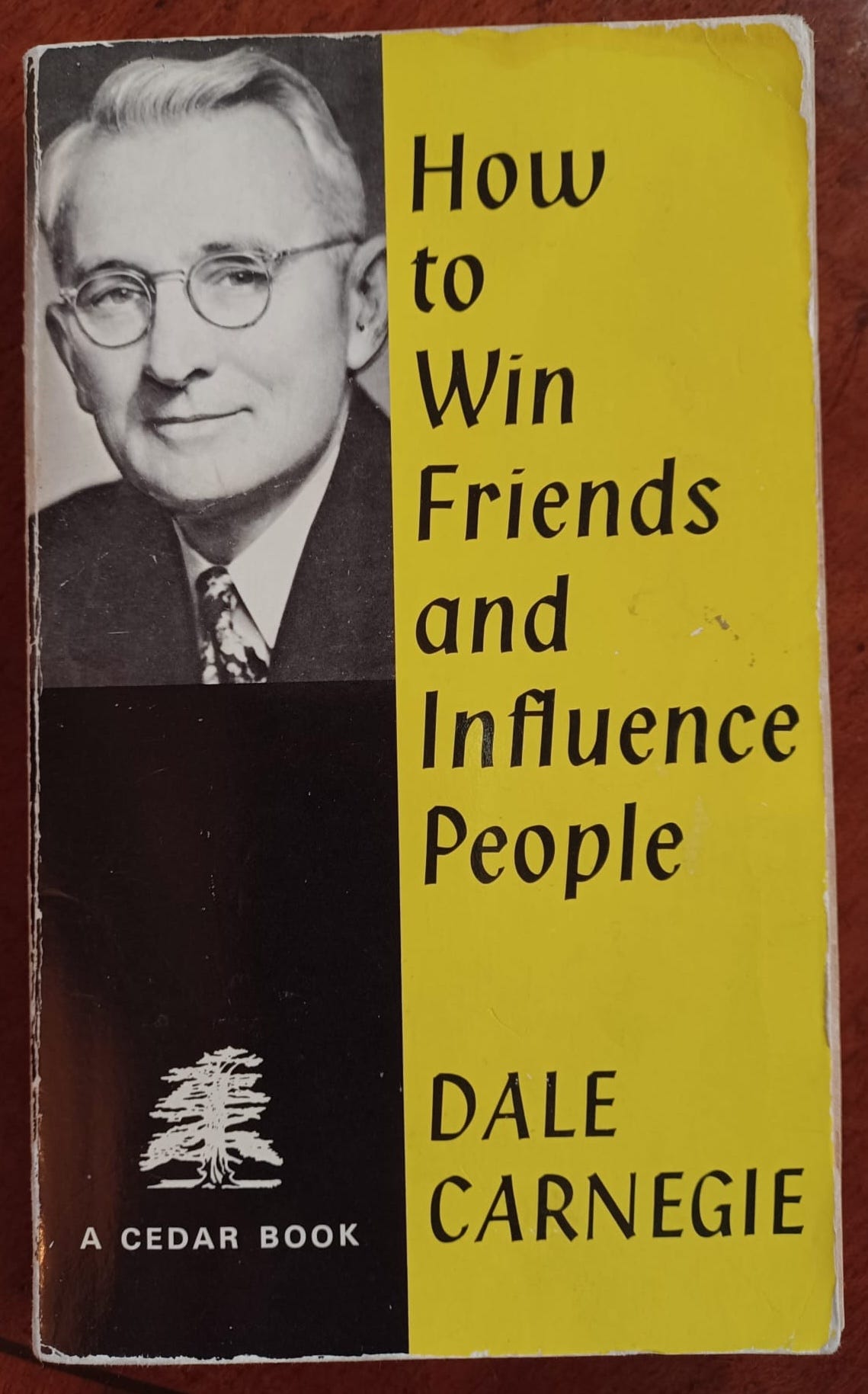

I am a huge fan of Dale Carnegie and mention him in pretty much every interview I give. Carnegie was American, born in 1888, raised on a farm, and wrote one of the greatest self-help books of all time, How to Win Friends and Influence People. The book has now sold over 30 million copies worldwide.

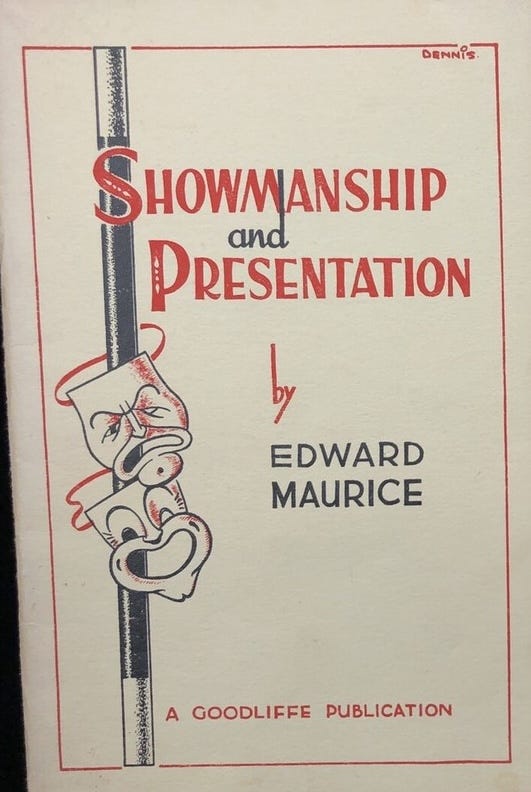

I first came across his work when I was about 10 years old and read this book on showmanship and presentation….

According to Edward Maurice, it’s helpful if magicians are likeable (who knew!), so he recommended that they read Carnegie’s book. I still have my original copy, and it’s covered in my notes and highlights.

One of my favourite — and wonderfully simple — pieces of advice is to smile more. Since the book was written, psychologists have discovered lots about the power of smiling. There is evidence that forcing your face into a smile makes you feel better (known as the facial feedback hypothesis). In addition, it often elicits a smile in return and, in doing so, makes others feel good too. As a result, people enjoy being around you. But, as Carnegie says, it must be a genuine smile, as fake grins look odd and are ineffective. Try it the next time you meet someone, answer the telephone, or open your front door. It makes a real difference.

In another section of the book, Carnegie tells an anecdote about a parent whose son went to university but never replied to their letters. To illustrate the importance of seeing a situation from another person’s point of view, Carnegie advised the parent to write a letter saying that they had enclosed a cheque — but to leave out the cheque. The son replied instantly.

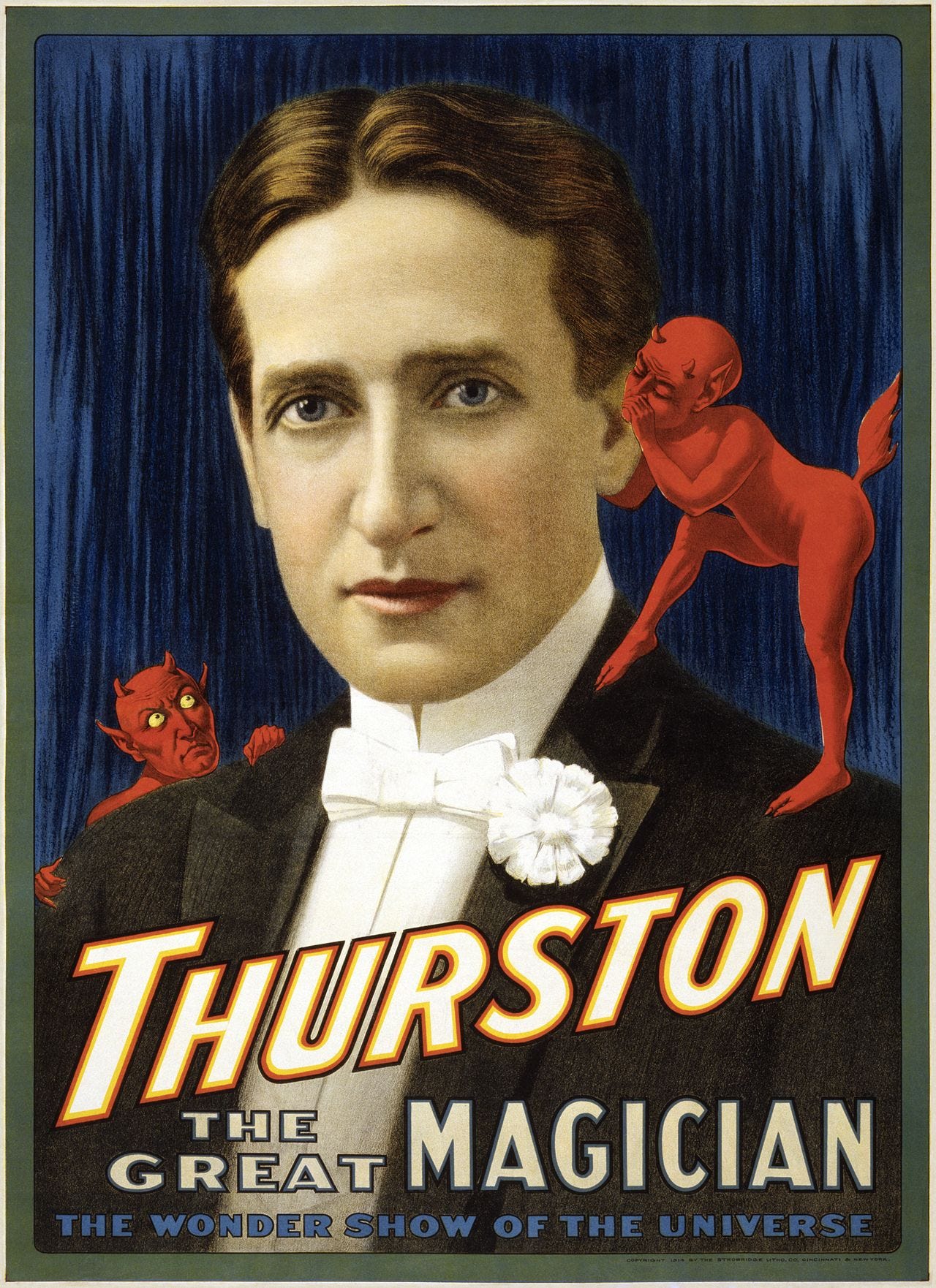

Then there is the power of reminding yourself how much the people in your life mean to you. Carnegie once asked the great stage illusionist Howard Thurston about the secret of his success. Thurston explained that before he walked on stage, he always reminded himself that the audience had been kind enough to come and see him. Standing in the wings, he would repeat the phrase, “I love my audience. I love my audience.” He then walked out into the spotlight with a smile on his face and a spring in his step.

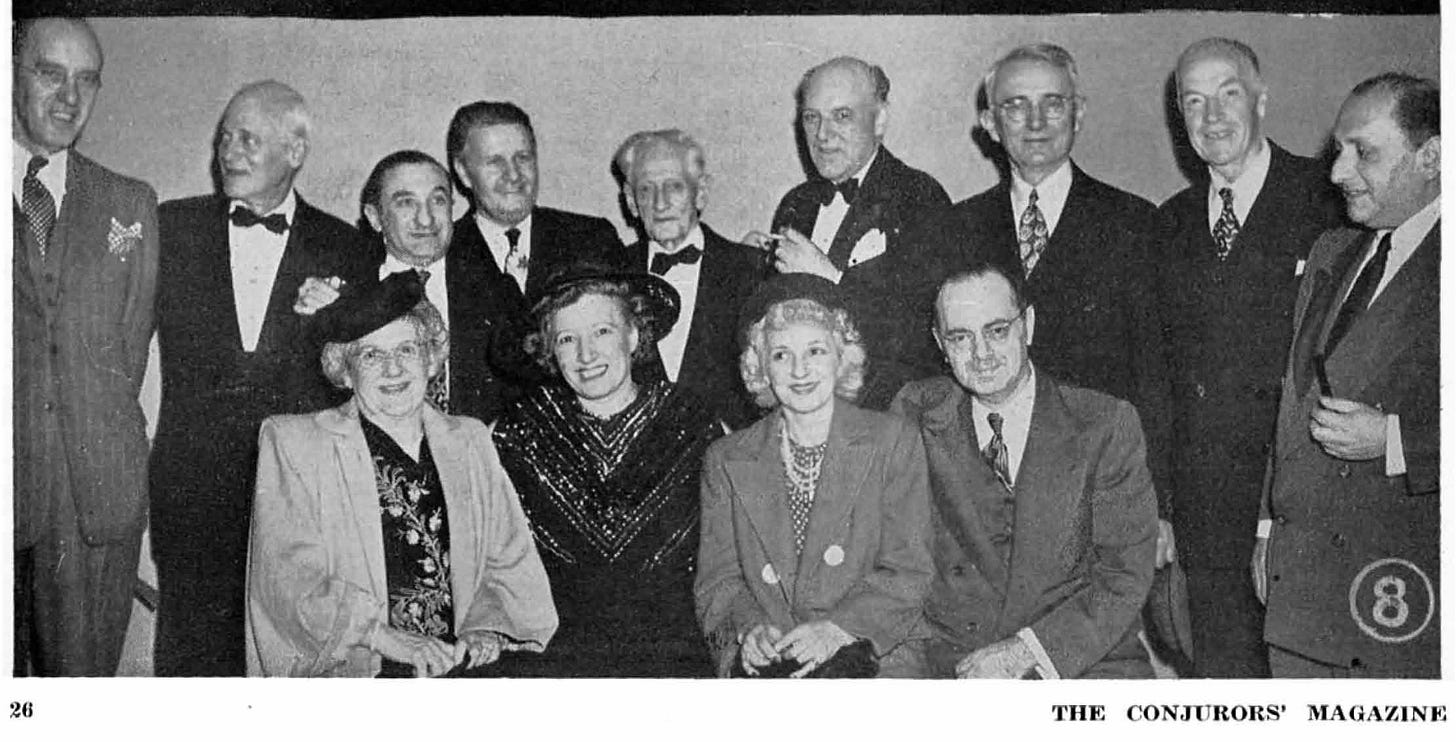

This is not the only link between Carnegie and magic. Dai Vernon was a hugely influential exponent of close-up magic and, in his early days, billed himself as Dale Vernon because of the success of Dale Carnegie (The Vernon Touch, Genii, April 1973). In addition, in 1947 Carnegie was a VIP guest at the Magicians’ Guild Banquet Show in New York. Here is a rare photo of the great author standing with several famous magicians of the day (from Conjurers Magazine, Vol. 3, No. 4; courtesy of the brilliant Lybrary.com).

Front row (left to right): Elsie Hardeen, Dell O’Dell, Gladys Hardeen, J. J. Proskauer

Back row: E. W. Dart, Terry Lynn, Al Flosso, Mickey MacDougall, Al Baker, Warren Simms, Dale Carnegie, Max Holden, Jacob Daley

If you don’t have a copy, go and get How to Win Friends and Influence People. Some of the language is dated now, but the thinking is still excellent. Oh, and there is a terrific biography of Carnegie by Steven Watts.

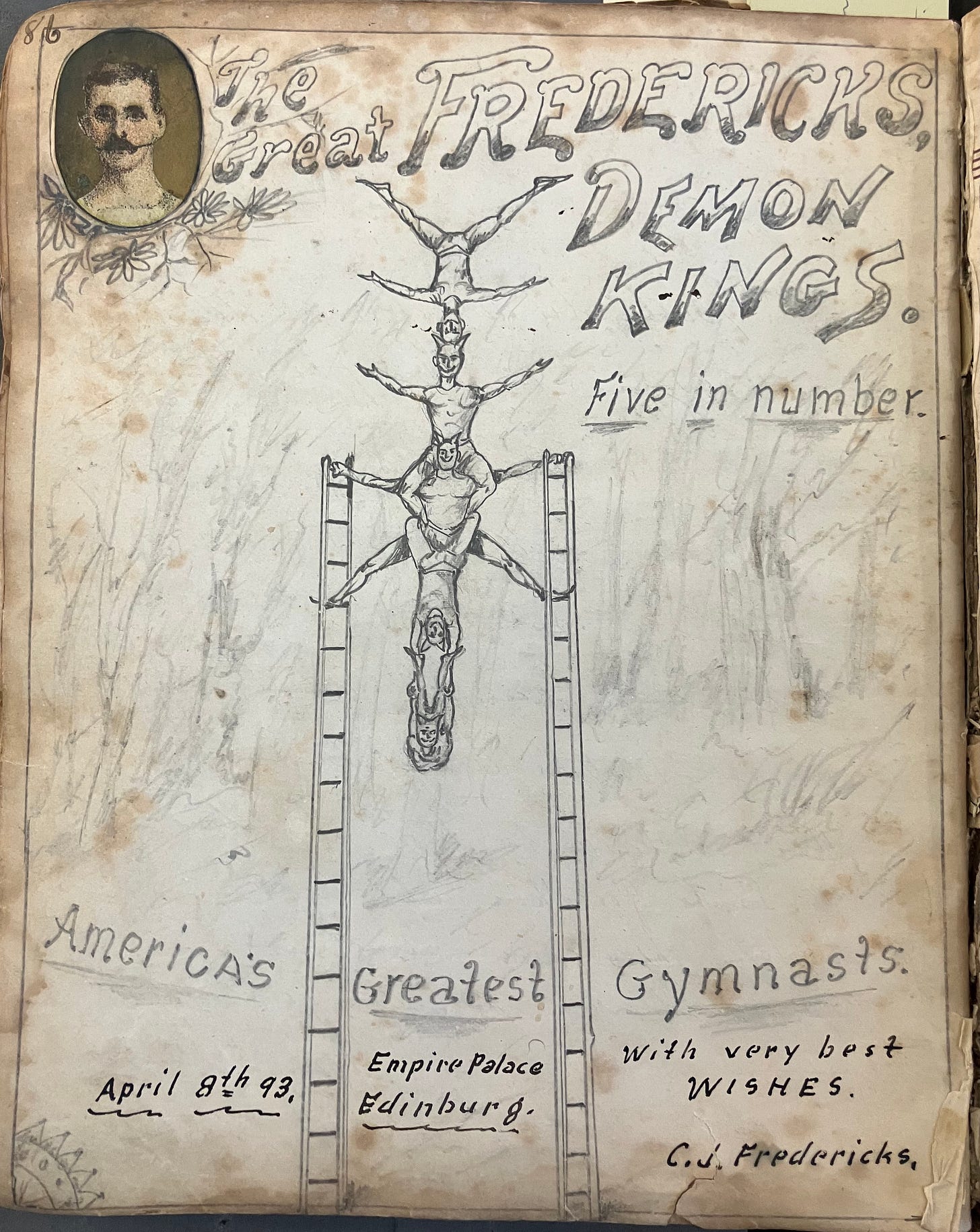

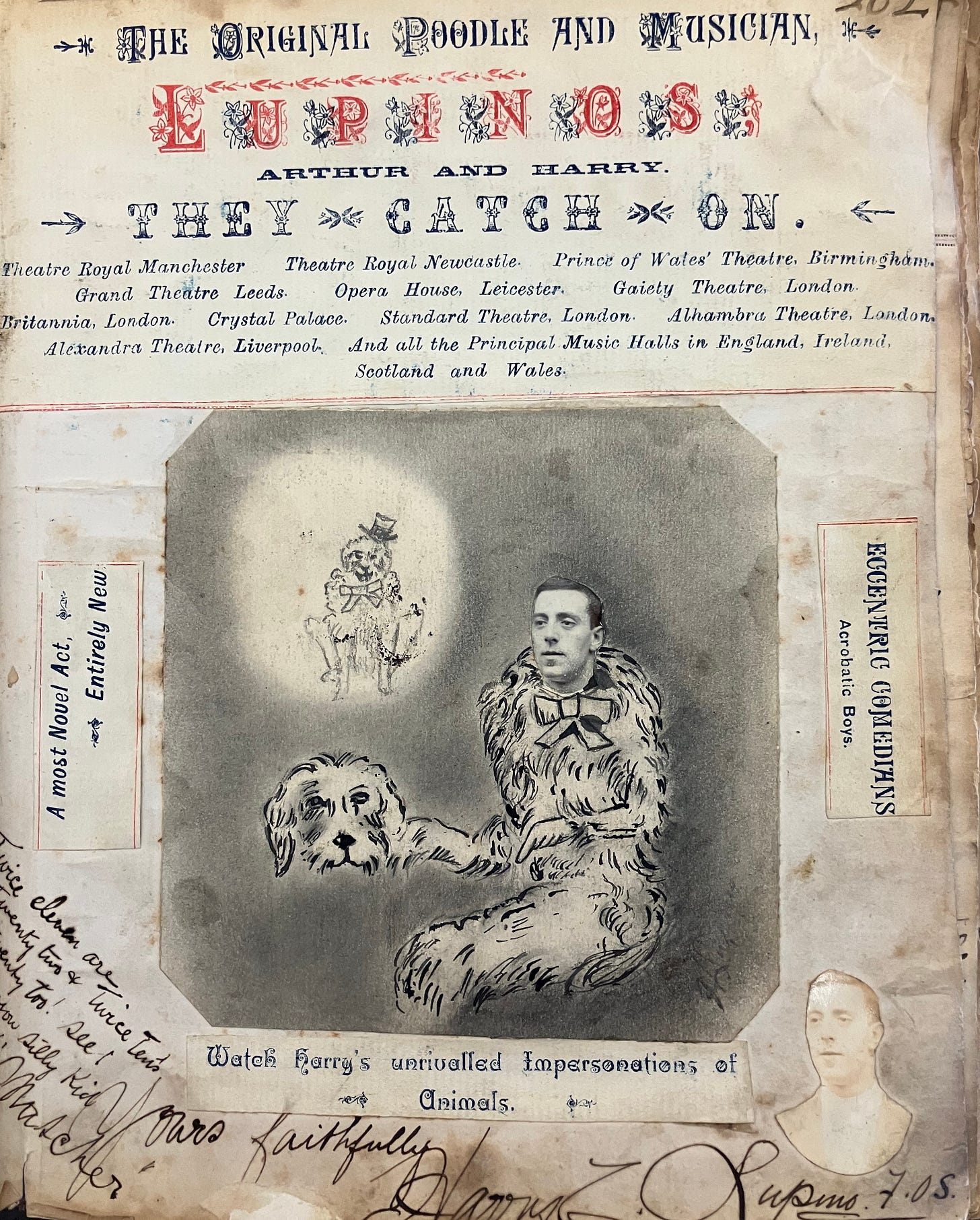

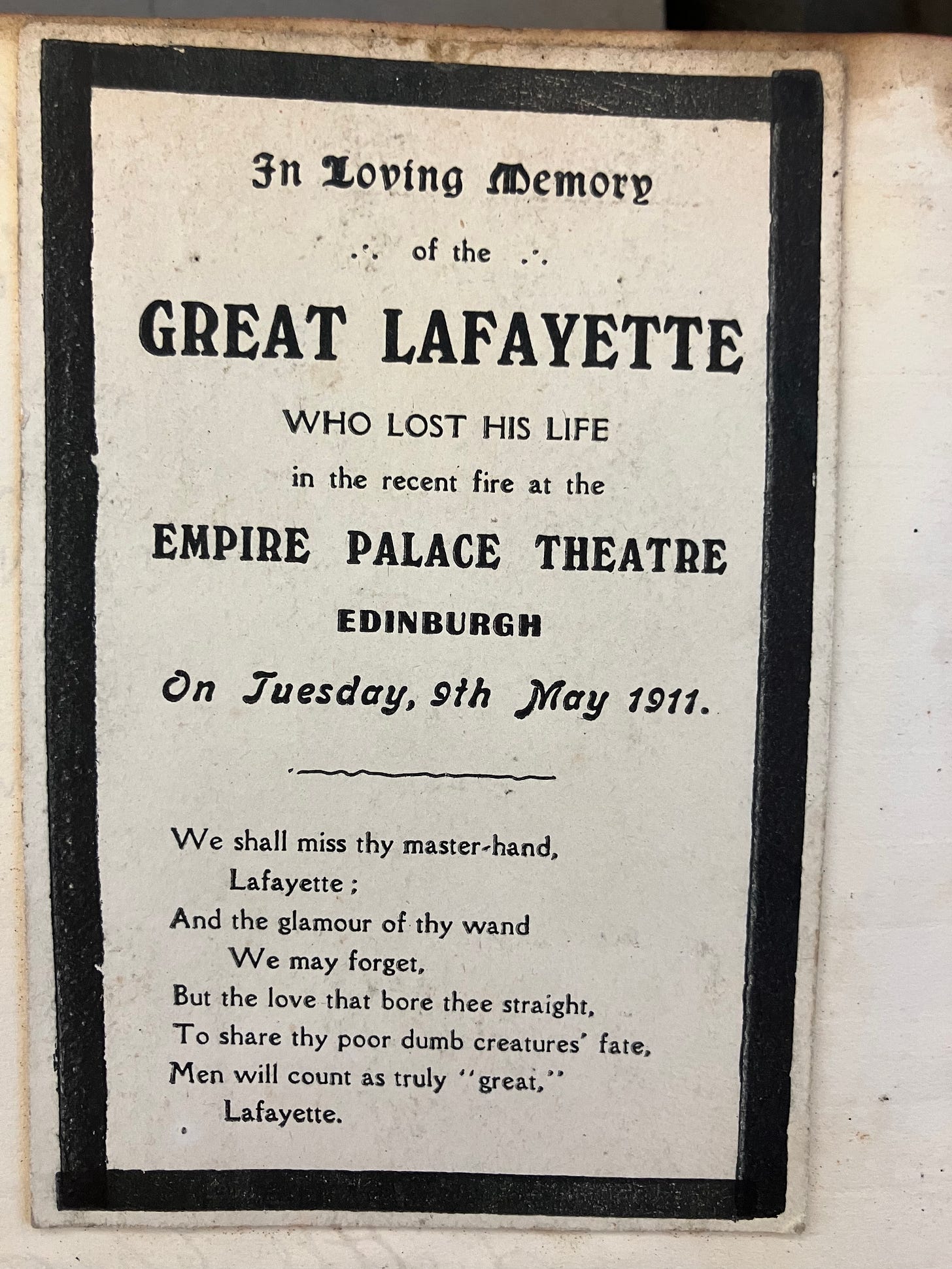

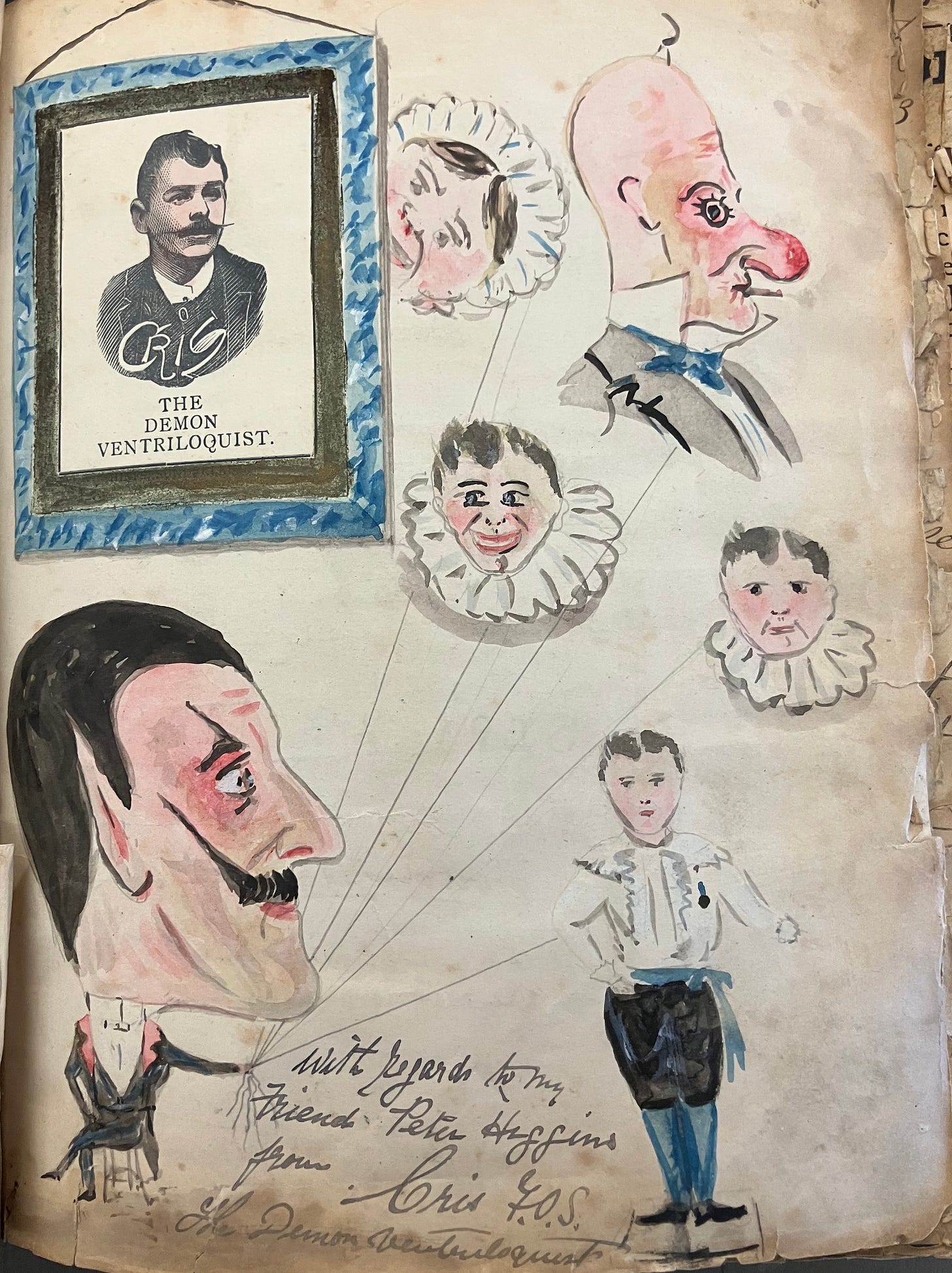

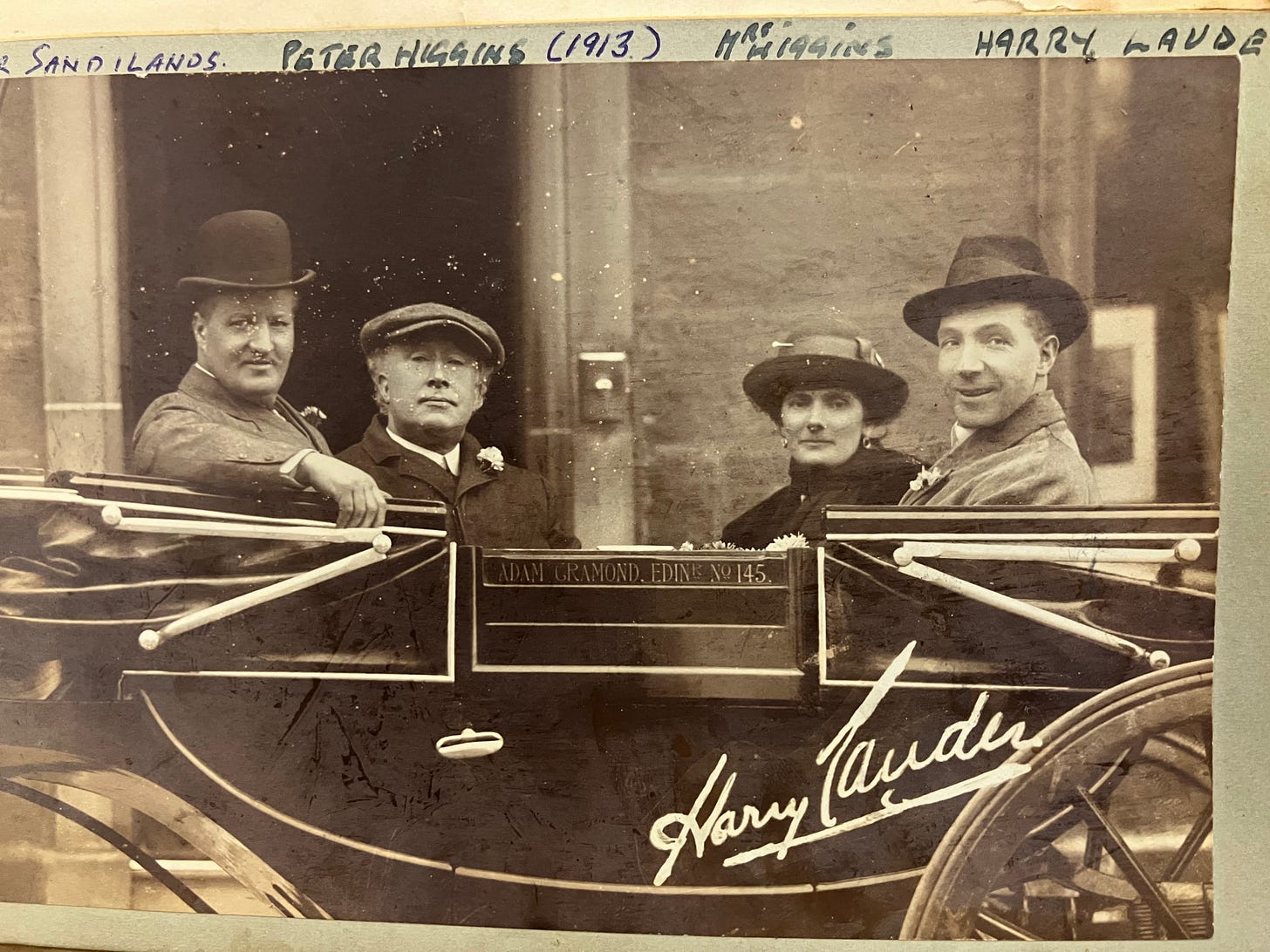

It’s time for some obscure magic and music hall history.

I’m a fan of a remarkable Scottish conjurer called Harry Marvello. He was performing during the early 1900s and built a theatre in Edinburgh that is now an amusement arcade. I have discussed Marvello in a previous post, and have just written a long article about him for a magic history magazine called Gibecière (the issue is out soon).
